What a difference context makes...

Yesterday, I observed my student teacher's statistics lesson on confidence intervals using a t-distribution. We both agreed that the lesson went fairly well and without a doubt emphasized the main ideas needed to help students understand the day's learning objective:

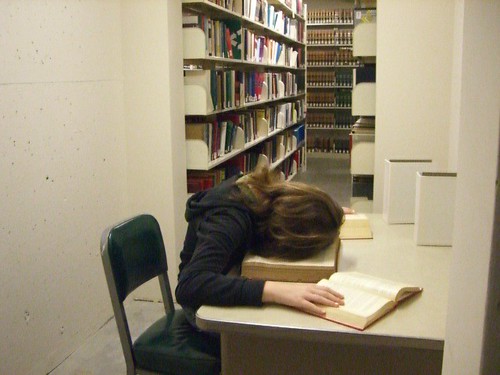

Construct and interpret confidence intervals for a population mean using t-tables when n < 30 and/or the population standard deviation is unknown.

This topic is a common one, taught in any introductory statistics class during the inferential statistics half of the syllabus. In fact, just the school day before, students were exposed to the underlying ideas behind confidence intervals and the difference between descriptive statistics and inferential statistics. After yesterday's lesson, my student teacher and I both lamented how tired and unresponsive the class as a whole was. No matter how many jokes he cracked or stories he told about the history and relevance of confidence intervals, the students were very subdued and content with the silence. Aside from a few strategies we discussed for engaging the class in other ways, it seemed like one of those "it will hopefully be better tomorrow" conclusions.

Then, "after school" happened. A student who is not currently, and has never previously been, enrolled in Statistics strolled into our room. For the sake of anonymity, we'll call her "Barbara." Barbara is working on her science fair project, and her teacher suggested that she pay a visit to the resident statisticians (my student teacher and me) to critique her data analysis. (Note: I'm really excited that this type of interdisciplinary collaboration is becoming more common between Dawn and our statistics department - last week our class critiqued her class's data tables, graphs and charts for appropriate use of descriptive statistics.) As it turns out, Barbara was testing the effect of several variables on a person's ability to run. Her averages were within one or two seconds of each other. Based on her descriptive statistics toolkit (mean, median, mode and range), it appeared that there was a meaningful difference between her variables, since the averages were separated by as much as two seconds. After all, to the average US citizen, a runner who has raced eight times with an average time of 23.5 seconds seems faster than another runner who has also raced eight times with an average of 24.4 seconds, right?!

Enter inferential statistics and confidence intervals.

Over the next thirty minutes, my student teacher and I taught Barbara an abbreviated version of the same lesson he had taught earlier that afternoon to our statistics class. Barbara added standard deviation to her descriptive statistics toolkit and asked questions until she not only understood the idea behind confidence intervals, but also felt like she was able to explain it to someone else, a requirement for the science fair project. Barbara asked questions like "Would my intervals change if I increased the number of trials?" and "Why should I choose a 95% level of confidence instead of a 99% level?" She left the room satisfied and ready to add to the data analysis piece of her project, as well as add more depth to her "results, discussion and conclusion" component based on her questions.
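For readers who want to see the arithmetic behind Barbara's questions, here is a minimal Python sketch of a t-based interval (mean ± t × s / √n) applied to the two runners from the example above. The sample standard deviation of 1.2 seconds is purely an assumption for illustration, since Barbara's actual spread isn't reported here, and the function names and the small lookup table are made up for this sketch. The critical values are the usual two-tailed entries from a standard t-table.

```python
import math

# Two-tailed critical t-values from a standard t-table,
# keyed by (degrees of freedom, confidence level).
T_TABLE = {
    (7, 0.95): 2.365,
    (7, 0.99): 3.499,
    (15, 0.95): 2.131,
}


def t_interval(mean, sd, n, confidence=0.95):
    """Return (low, high): mean +/- t * sd / sqrt(n), with n - 1 degrees of freedom."""
    t_crit = T_TABLE[(n - 1, confidence)]
    margin = t_crit * sd / math.sqrt(n)
    return mean - margin, mean + margin


def overlaps(a, b):
    """True when two (low, high) intervals share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


# ASSUMPTION: a hypothetical sample standard deviation, in seconds.
# It is not taken from Barbara's data.
ASSUMED_SD = 1.2

runner_a = t_interval(23.5, ASSUMED_SD, n=8)
runner_b = t_interval(24.4, ASSUMED_SD, n=8)
print("Runner A, 95%:", runner_a)
print("Runner B, 95%:", runner_b)
print("Intervals overlap:", overlaps(runner_a, runner_b))

# "Why 95% instead of 99%?" Same data, higher confidence level.
print("Runner A, 99%:", t_interval(23.5, ASSUMED_SD, n=8, confidence=0.99))

# "Would my intervals change with more trials?" Same mean and spread, n = 16.
print("Runner A, n=16:", t_interval(23.5, ASSUMED_SD, n=16))
```

Under that assumed spread, the two 95% intervals overlap, so a 0.9-second gap in the averages is not, by itself, convincing evidence of a real difference. The last two lines speak to Barbara's questions: a 99% level widens the interval, and doubling the trials narrows it, assuming the mean and standard deviation stay about the same.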

I am 95% confident that Barbara left the room with a deeper understanding of inferential statistics than those currently enrolled in the statistics course. What a difference context makes...